Running Tests for OHIF

This page introduces the test types available for OHIF and explains how to run each one, so you can make sure your contribution hasn't broken any existing functionality. The idea and philosophy behind each testing category are discussed in the second part of this page.

Unit test

To run the unit tests:

yarn run test:unit:ci

Note: You should have already installed all the packages with yarn install.

Running the unit tests generates a report at the end, listing the successful and unsuccessful tests with detailed explanations.

End-to-end test

For running the OHIF e2e test you need to run the following steps:

Open a new terminal, and from the root of the OHIF mono repo, run the following command:

yarn test:dataThis will download the required data to run the e2e tests (it might take a while). The

test:dataonly needs to be run once and checks the data out. Read more about test data below.Run the viewer with e2e config

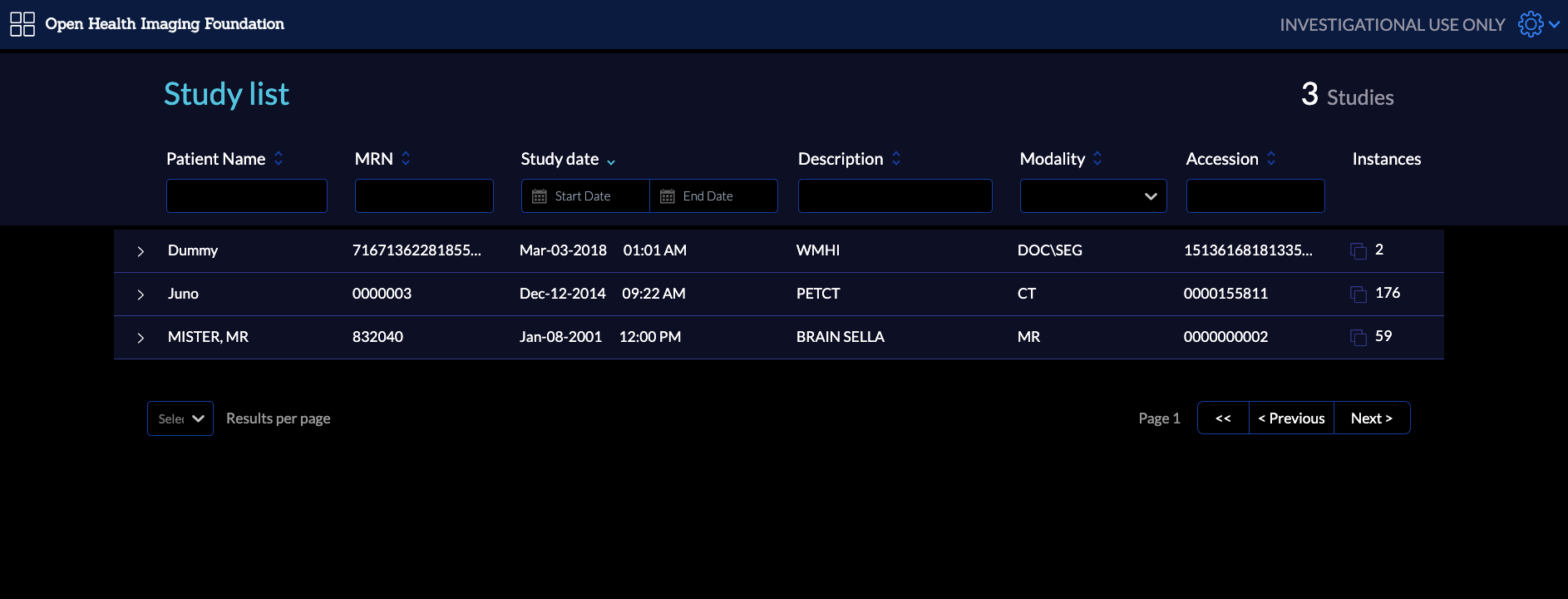

APP_CONFIG=config/e2e.js yarn startYou should be able to see test studies in the study list

Open a new terminal inside the OHIF project, and run the e2e cypress test

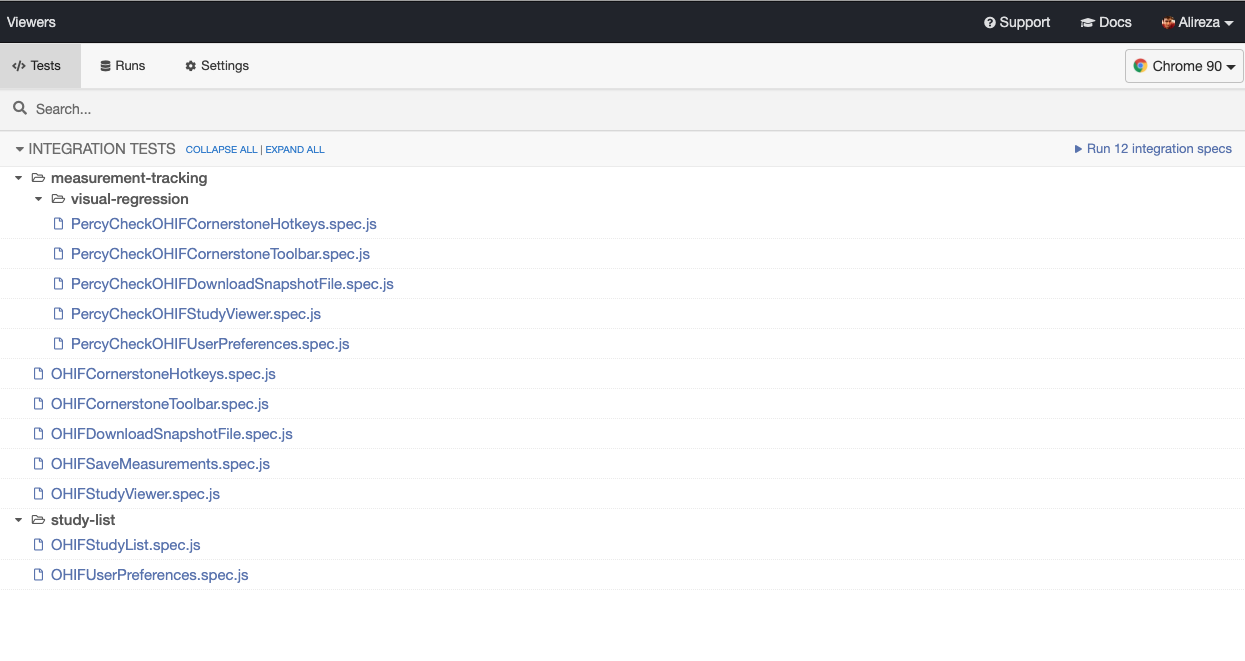

yarn test:e2eYou should be able to see the cypress window open

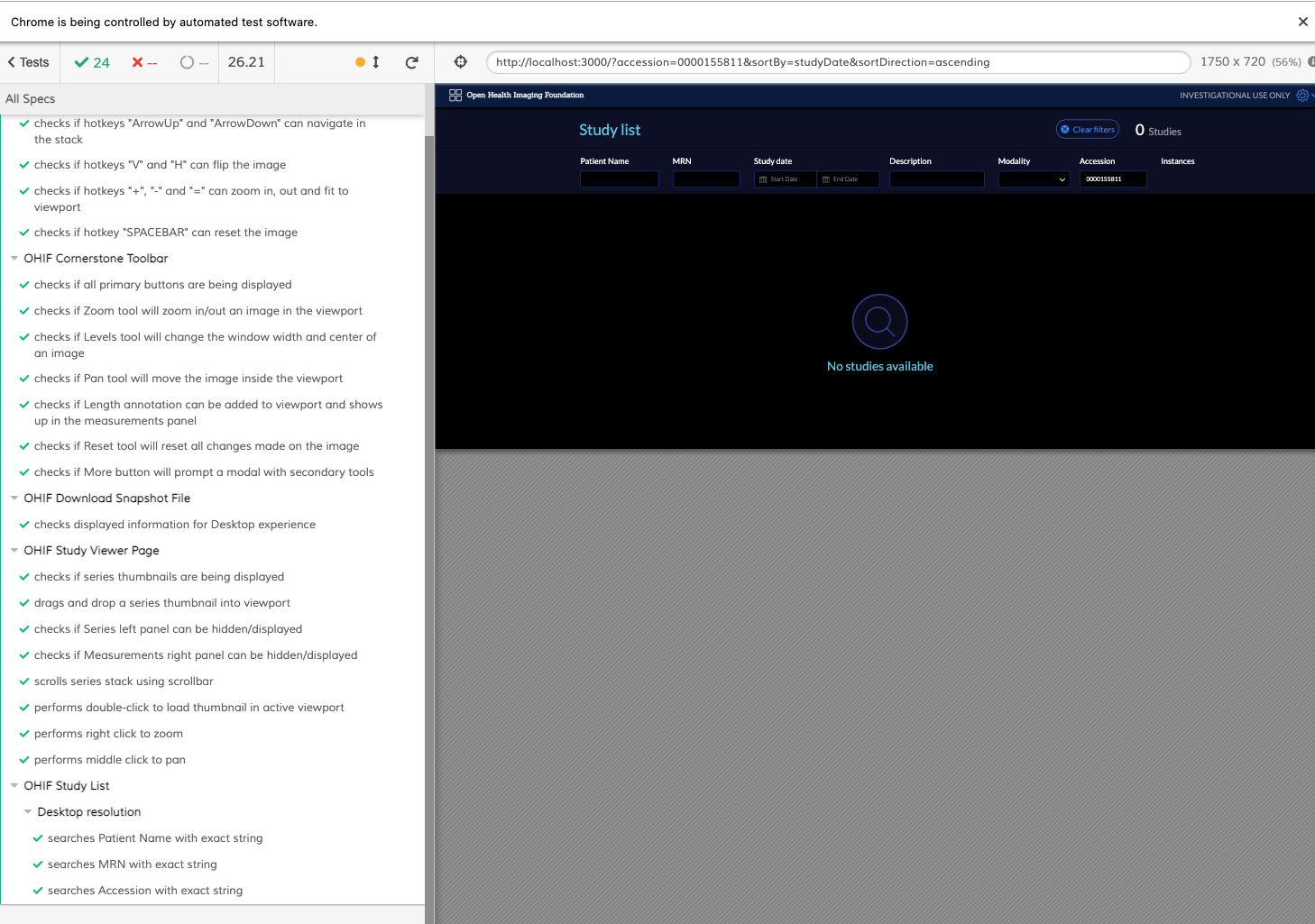

Run the tests by clicking on the

Run #number integration tests.A new window will open, and you will see e2e tests being executed one after each other.

Test Data

The testing data is stored in two OHIF repositories. The first, viewer-testdata, contains the binary DICOM data, while the second contains data in the DICOMweb format and is installed as a submodule into OHIF in the testdata directory. This data is retrieved via the command yarn test:data or the equivalent command:

git submodule update --init

When adding new data, run the following to update the local dicomweb submodule in viewer-testdata:

npm install -g dicomp10-to-dicomweb

mkdicomweb -d dicomweb dcm

Then, commit that data and update the submodules used in OHIF and in the viewer-testdata parent modules.

All data MUST be fully anonymized and allowed to be used for open access. Any attributions should be included in the DCM directory.

Testing Philosophy

Testing is an opinionated topic. Here is a rough overview of our testing philosophy. See something you want to discuss or think should be changed? Open a PR and let's discuss.

You're an engineer. You know how to write code, and writing tests isn't all that different. But do you know why we write tests? Do you know when to write one, or what kind of test to write? How do you know if a test is a "good" test? This document's goal is to give you the tools you need to make those determinations.

Okay. So why do we write tests? To increase our... CONFIDENCE

- If I do a large refactor, does everything still work?

- If I change some critical piece of code, is it safe to push to production?

Gaining the confidence we need to answer these questions after every change is costly. Good tests allow us to answer them without manual regression testing. What and how we choose to test to increase that confidence is nuanced.

Further Reading: Kinds of Tests

Tests buy us confidence, but not all tests are created equal. Each kind of test has a different cost to write and maintain. An expensive test is worth it if it gives us confidence that a payment is processed, but it may not be the best choice for asserting an element's border color.

| Test Type | Example | Speed | Cost |
|---|---|---|---|
| Static | addNums(1, '2') called with string, expected int. | 🚀 Instant | 💸 |
| Unit | addNums(1, 2) returns expected result 3 | ✈️ Fast | 💸💸 |
| Integration | Clicking "Sign In" navigates to the dashboard (mocked network requests) | 🏃‍♂️ Okay | 💸💸💸 |
| End-to-end | Clicking "Sign In" navigates to the dashboard (no mocks) | 🐢 Slow | 💸💸💸💸 |

- 🚀 Speed: How quickly tests run

- 💸 Cost: Time to write, and to debug when broken (more points of failure)

Static Code Analysis

Modern tooling gives us this "for free". It can catch invalid regular expressions, unused variables, and guarantee we're calling methods/functions with the expected parameter types.
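As an illustration of the Static row in the table above, here is a minimal sketch that assumes the TypeScript compiler (`tsc`) is the static checker; `addNums` is the hypothetical helper from the table, not an OHIF function. The `// @ts-expect-error` directive marks the call the compiler rejects, so the file still type-checks while documenting the error it would otherwise report.

```typescript
// `addNums` is a hypothetical helper taken from the table above.
// Its parameter types are what let the type checker validate every caller.
function addNums(a: number, b: number): number {
  return a + b;
}

// Type-checks: both arguments are numbers, so this returns 3.
const ok = addNums(1, 2);

// `tsc` reports a type error on the next line because '2' is a string.
// The directive acknowledges that expected error so the file still compiles.
// @ts-expect-error string argument passed where a number is required
const bad = addNums(1, '2');

// At runtime JavaScript concatenates instead of adding, so `bad` holds the
// string "12". The static check surfaces this bug before the code ever runs.
console.log(ok, bad);
```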

Example Tooling:

Unit Tests

The building blocks of our libraries and applications. For these, you'll often be testing a single function or method. Conceptually, this equates to:

Pure Function Test:

- If I call sum(2, 2), I expect the output to be 4

Side Effect Test:

- If I call resetViewport(viewport), I expect cornerstone.reset to be called with viewport
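The two tests above can be sketched in plain TypeScript. This is a minimal, dependency-free sketch under stated assumptions: `sum` and `resetViewport` are hypothetical, and the cornerstone dependency is passed in as a parameter (an assumption made here so a test can substitute a spy) rather than imported. In a real suite you would more likely use a test runner such as Jest, whose `expect` and `jest.fn()` replace the hand-rolled checks and spy shown here.

```typescript
// Pure function: the output depends only on the inputs.
function sum(a: number, b: number): number {
  return a + b;
}

// Hypothetical shape of the one cornerstone method this sketch relies on.
interface CornerstoneLike {
  reset(viewport: unknown): void;
}

// Hypothetical side-effect function. The dependency is injected (an
// assumption for this sketch) so a test can pass in a spy.
function resetViewport(viewport: unknown, cornerstone: CornerstoneLike): void {
  cornerstone.reset(viewport);
}

// Minimal hand-rolled spy that records the argument of every reset call.
function createResetSpy(): CornerstoneLike & { calls: unknown[] } {
  const calls: unknown[] = [];
  return {
    calls,
    reset(viewport: unknown): void {
      calls.push(viewport);
    },
  };
}

// Pure function test: if I call sum(2, 2), I expect the output to be 4.
const sumResult = sum(2, 2);
if (sumResult !== 4) {
  throw new Error(`expected sum(2, 2) to be 4, got ${sumResult}`);
}

// Side effect test: if I call resetViewport(viewport), I expect
// cornerstone.reset to be called exactly once with that same viewport.
const viewport = { id: 'viewport-1' };
const spy = createResetSpy();
resetViewport(viewport, spy);
if (spy.calls.length !== 1 || spy.calls[0] !== viewport) {
  throw new Error('expected cornerstone.reset to be called with viewport');
}
```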

When to use

Anything that is exposed as public API should have unit tests.

When to avoid

Avoid unit tests that actually test implementation details. You're testing implementation details if:

- Your test does something that the consumer of your code would never do.

  - e.g., using a private function

- A refactor that doesn't change behavior can break your tests
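To make the distinction concrete, here is a small sketch with a hypothetical module: `formatPatientName` stands in for public API and `splitDicomName` for a private, unexported helper. Neither is an OHIF function. The checks exercise only the public function, so replacing the helper (for example, inlining it) would not break them, whereas a test that called `splitDicomName` directly would.

```typescript
// Private helper (would NOT be exported from the module). DICOM person
// names separate their components with '^', e.g. "Family^Given".
function splitDicomName(raw: string): string[] {
  return raw.split('^');
}

// Public API (would be exported): formats a DICOM person name for display.
function formatPatientName(raw: string): string {
  const [family = '', given = ''] = splitDicomName(raw);
  return given ? `${given} ${family}` : family;
}

// Good: assert on what a consumer observes through the public function.
function checkPublicBehavior(): void {
  if (formatPatientName('Doe^John') !== 'John Doe') {
    throw new Error('expected "Doe^John" to format as "John Doe"');
  }
  if (formatPatientName('Doe') !== 'Doe') {
    throw new Error('expected a family-only name to be returned unchanged');
  }
}

checkPublicBehavior();

// Avoid: asserting on splitDicomName('Doe^John') directly couples the test
// to how formatPatientName happens to be implemented today.
```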

Integration Tests

We write integration tests to gain confidence that several units work together. Generally, we want to mock as little as possible for these tests. In practice, this means only mocking network requests.
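A minimal sketch of that approach in TypeScript, assuming the code under test accepts a `fetch`-like function as a parameter: `fetchStudies`, `toStudyListRows`, `loadStudyList`, the `/studies` endpoint, and the response shape are all hypothetical (this is not the DICOMweb response format). The two real units run together, and only the network call is replaced with a fake that returns canned data.

```typescript
// Minimal shape of the network function the code depends on (an assumption).
type FetchLike = (url: string) => Promise<{ json(): Promise<unknown> }>;

// Hypothetical response record; not the DICOMweb JSON format.
interface StudySummary {
  patientName: string;
  studyDate: string;
}

// Unit 1: data access, the only piece that touches the network.
async function fetchStudies(fetchFn: FetchLike, baseUrl: string): Promise<StudySummary[]> {
  const response = await fetchFn(`${baseUrl}/studies`);
  return (await response.json()) as StudySummary[];
}

// Unit 2: pure formatting for the study list.
function toStudyListRows(studies: StudySummary[]): string[] {
  return studies.map((study) => `${study.patientName} (${study.studyDate})`);
}

// The code under test wires both units together.
async function loadStudyList(fetchFn: FetchLike, baseUrl: string): Promise<string[]> {
  const studies = await fetchStudies(fetchFn, baseUrl);
  return toStudyListRows(studies);
}

// The only mock: a fake network call that records URLs and returns canned data.
const requestedUrls: string[] = [];
const fakeFetch: FetchLike = async (url) => {
  requestedUrls.push(url);
  return {
    json: async () => [{ patientName: 'Anonymous', studyDate: '20200101' }],
  };
};

const rowsPromise = loadStudyList(fakeFetch, 'https://example.test/api');
rowsPromise.then((rows) => console.log(rows));
```

Because the formatter is not mocked, a change that breaks how the two units fit together (for example, renaming a field in only one of them) would make this test fail.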

End-to-End Tests

These are the most expensive tests to write and maintain, largely because, when they fail, they have the largest number of potential points of failure. So why do we write them? Because they also buy us the most confidence.

When to use

Mission-critical features and functionality, or to cover a large breadth of functionality until unit tests catch up. Unsure if we should have a test for feature X or scenario Y? Open an issue and let's discuss.

General

- Assert(js) Conf 2018 Talks

  - Write tests. Not too many. Mostly integration. - Kent C. Dodds

  - I see your point, but… - Gleb Bahmutov

- Static vs Unit vs Integration vs E2E Testing - Kent C. Dodds (Blog)

End-to-end Testing w/ Cypress

- Getting Started

  - Be sure to check out Getting Started and Core Concepts

- Best Practices

- Example Recipes